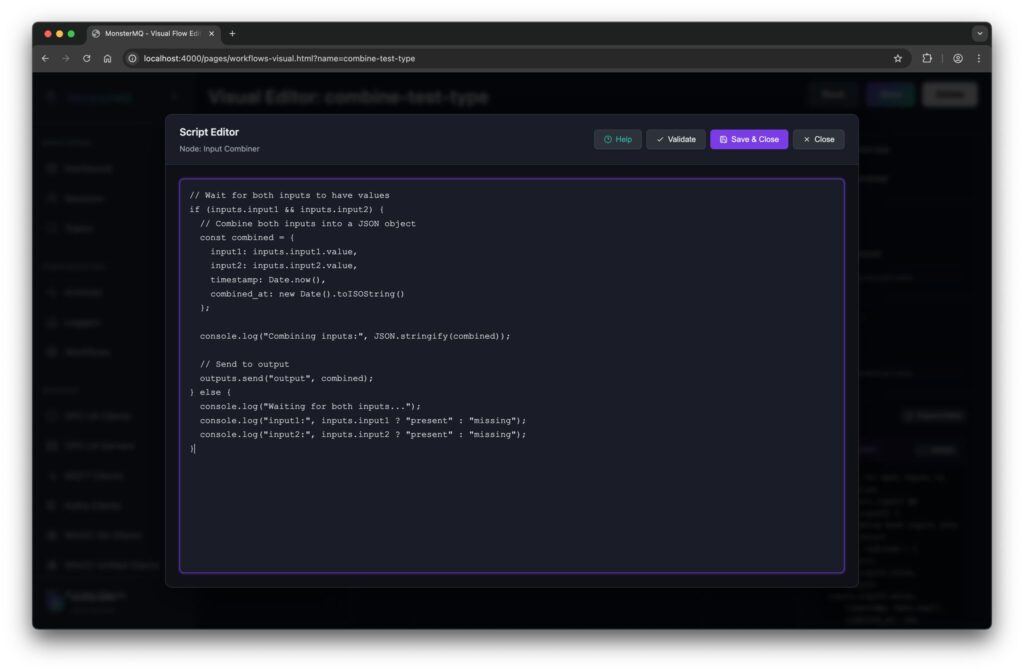

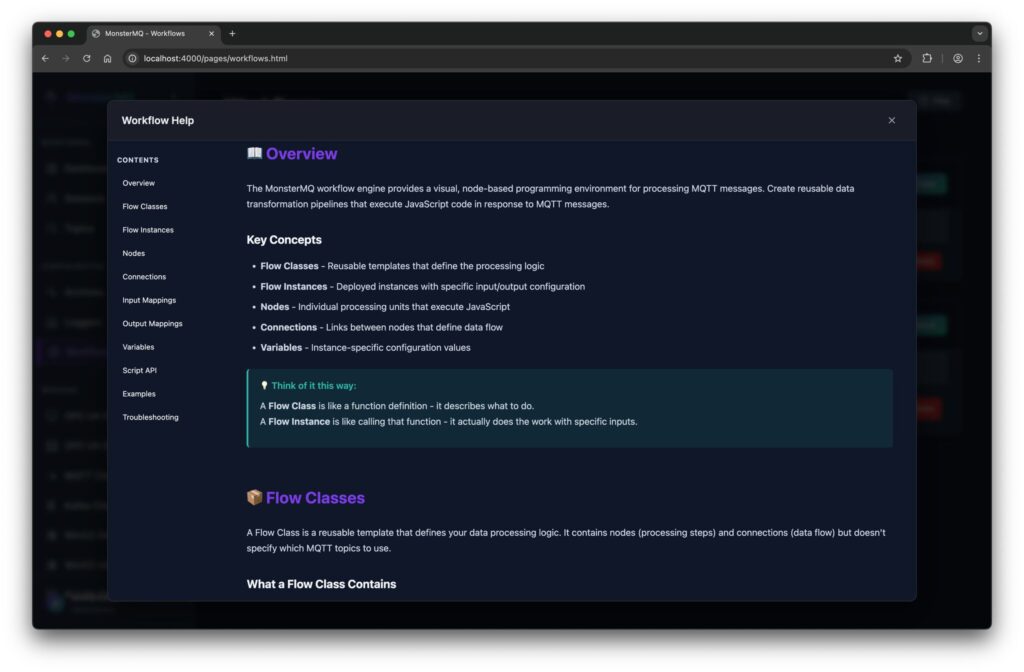

I’ve added a first version of a workflow engine that lets you define custom business logic directly inside the broker:

🔹 React on multiple input topics

🔹 Write your logic in JavaScript (more function types to come)

🔹 Publish results to output topics
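
To make the three steps above concrete, here is a toy model of the pattern in plain Python. It is only a sketch of the idea, not MonsterMQ's API: in the broker the logic itself is written in JavaScript, and the `FlowNode` class, the topic names, and the power calculation are all made up for illustration.

```python
from typing import Callable, Dict, List, Tuple


class FlowNode:
    """Toy model of one flow step (hypothetical, not the broker's API).

    It caches the latest value per input topic, runs a user function,
    and records the result for an output topic.
    """

    def __init__(self, inputs: List[str], output: str,
                 logic: Callable[[Dict[str, float]], float]):
        self.inputs = inputs
        self.output = output
        self.logic = logic
        self.latest: Dict[str, float] = {}
        # Stands in for a real publish call to the broker.
        self.published: List[Tuple[str, float]] = []

    def on_message(self, topic: str, value: float) -> None:
        if topic not in self.inputs:
            return  # not one of this node's inputs
        self.latest[topic] = value
        # Run the logic only once every input has delivered a value.
        if all(t in self.latest for t in self.inputs):
            self.published.append((self.output, self.logic(self.latest)))


# Hypothetical topics: derive electrical power from voltage and current.
node = FlowNode(
    inputs=["plant/voltage", "plant/current"],
    output="plant/power",
    logic=lambda v: v["plant/voltage"] * v["plant/current"],
)
node.on_message("plant/voltage", 230.0)  # waits, current is still missing
node.on_message("plant/current", 2.0)    # both inputs present, result recorded
print(node.published)
```

The point of the sketch is the shape: several inputs, one small function, one output, all living next to the data instead of in a separate service.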

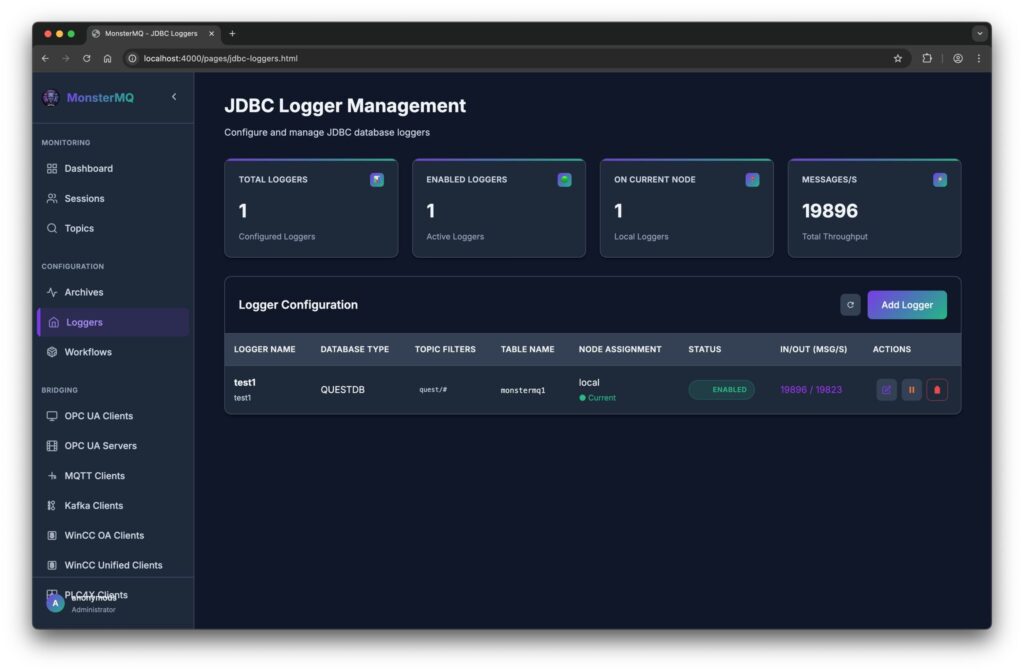

💾 And there is now a JDBC-based Data Logger with store and forward.

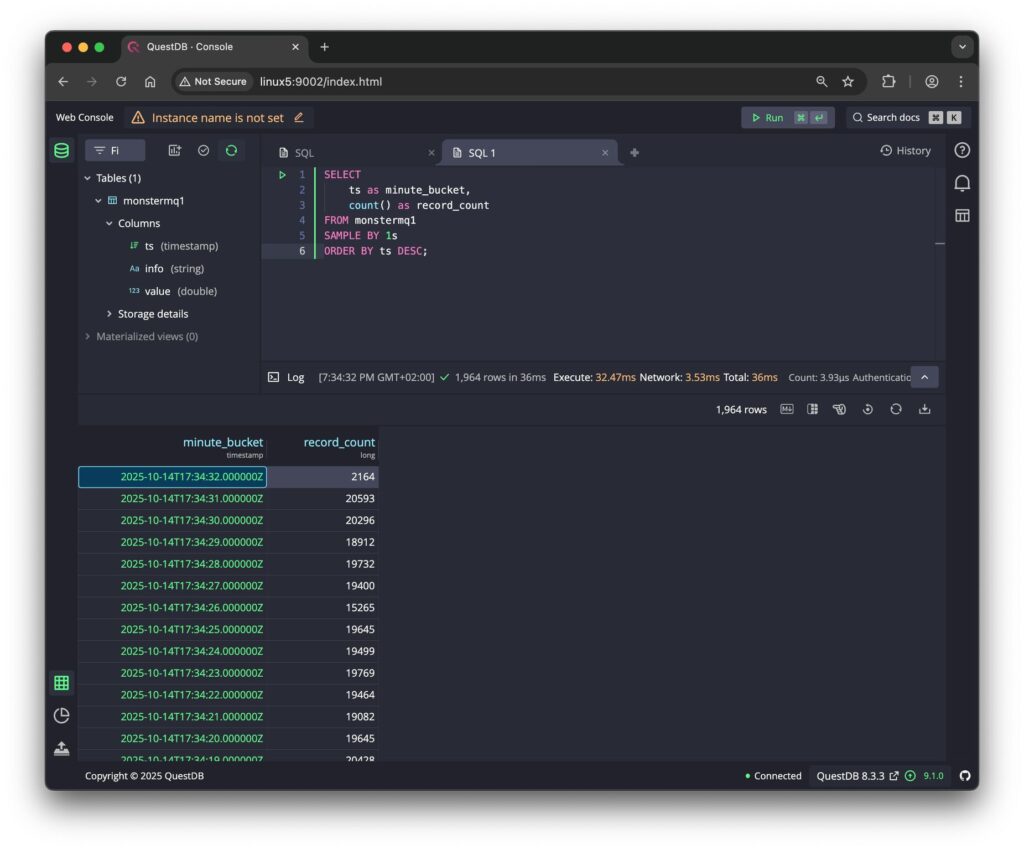

It currently supports JSON payloads. With a JSON schema, you can define how fields map to columns in your database tables.
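
As a rough illustration of the field-to-column idea, the Python sketch below turns a JSON payload into a parameterized insert. The schema layout, the rule that property names become column names, the `readings` table, and the helper names are assumptions for this example; the logger's actual configuration format may differ.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical schema: each property name is used as a column name.
SCHEMA: Dict[str, Any] = {
    "type": "object",
    "properties": {
        "sensor": {"type": "string"},
        "value": {"type": "number"},
        "ts": {"type": "string"},
    },
    "required": ["sensor", "value"],
}


def to_row(payload: str, schema: Dict[str, Any]) -> Tuple[List[str], List[Any]]:
    """Parse a JSON payload and return (columns, values) in schema order."""
    doc = json.loads(payload)
    missing = [k for k in schema.get("required", []) if k not in doc]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    columns = list(schema["properties"])
    # Optional fields that are absent become NULL (None).
    values = [doc.get(c) for c in columns]
    return columns, values


def insert_sql(table: str, columns: List[str]) -> str:
    """Build a parameterized INSERT statement for the given columns."""
    placeholders = ", ".join("?" for _ in columns)
    return f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"


columns, values = to_row('{"sensor": "s1", "value": 21.5}', SCHEMA)
print(insert_sql("readings", columns), values)
```

Using placeholders rather than string-formatting the values keeps the statement reusable for batches, which matters at high message rates.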

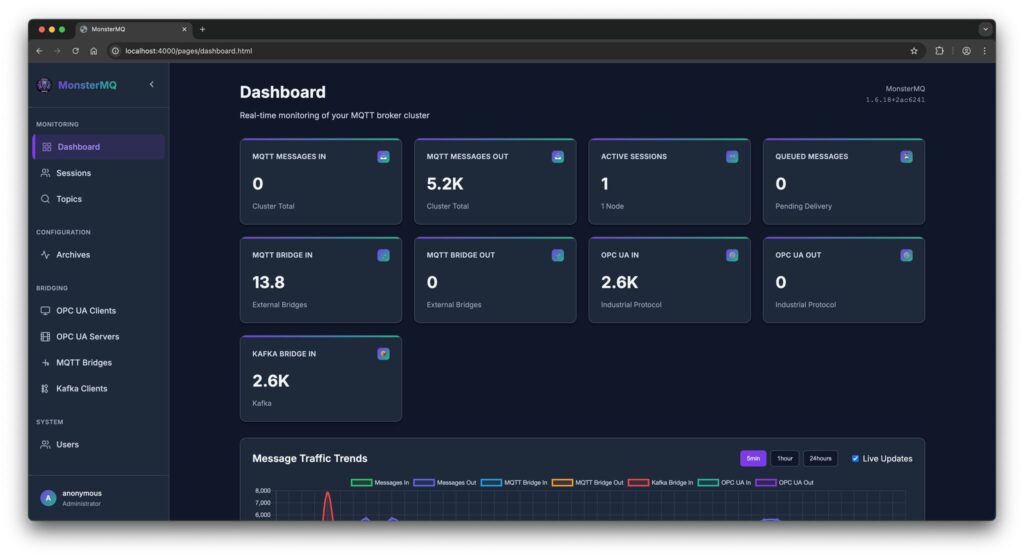

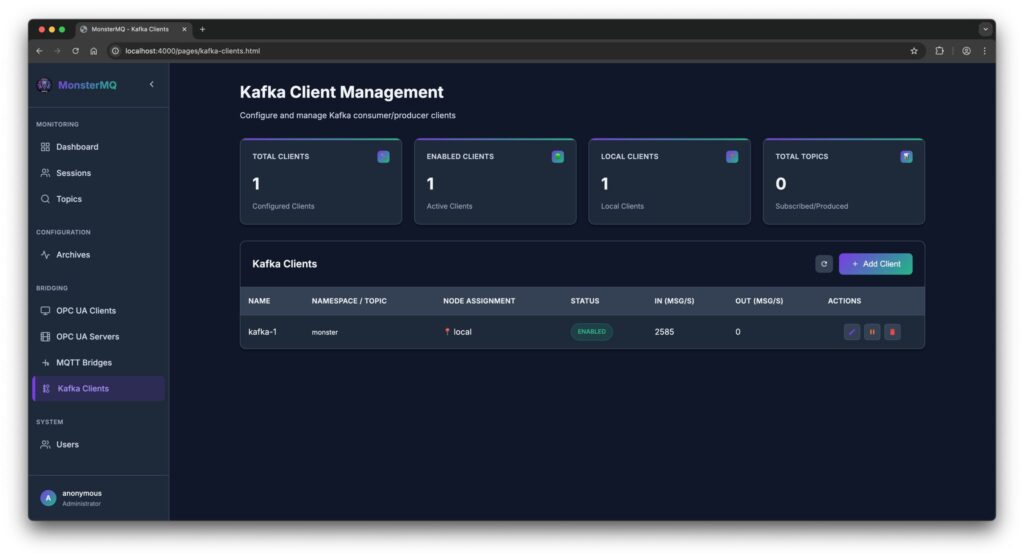

The pictures show a simple test with 30 Python clients publishing to the broker. It stores data at about 20 kHz (roughly 20,000 values per second) into a QuestDB instance without issues.
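
For a sense of scale, here is a small Python sketch of what each test client would have to send. It assumes the load is split evenly across the 30 clients and that each value is one message; neither detail comes from the test itself. The topic layout and payload shape are hypothetical, and `publish` is a stand-in for whatever MQTT client library you use.

```python
import json
from typing import Callable, List, Tuple


def per_client_rate(total_hz: float, clients: int) -> float:
    """Messages per second each client must send for an even split."""
    return total_hz / clients


def publish_burst(publish: Callable[[str, str], None],
                  client_id: int, count: int) -> None:
    """Send `count` JSON messages through the supplied publish function."""
    for seq in range(count):
        topic = f"test/client{client_id}/value"  # hypothetical topic layout
        publish(topic, json.dumps({"client": client_id, "seq": seq}))


# Collect messages in a list instead of talking to a real broker.
sent: List[Tuple[str, str]] = []
publish_burst(lambda topic, payload: sent.append((topic, payload)),
              client_id=1, count=3)

print(round(per_client_rate(20_000, 30), 1), len(sent))
```

Under those assumptions each client sends roughly 667 messages per second.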

It’s a simple way to process and store data where it flows – no need for external services or function frameworks.

👉 MonsterMQ.com 👉 Give it a star on GitHub if you like it. Thanks!