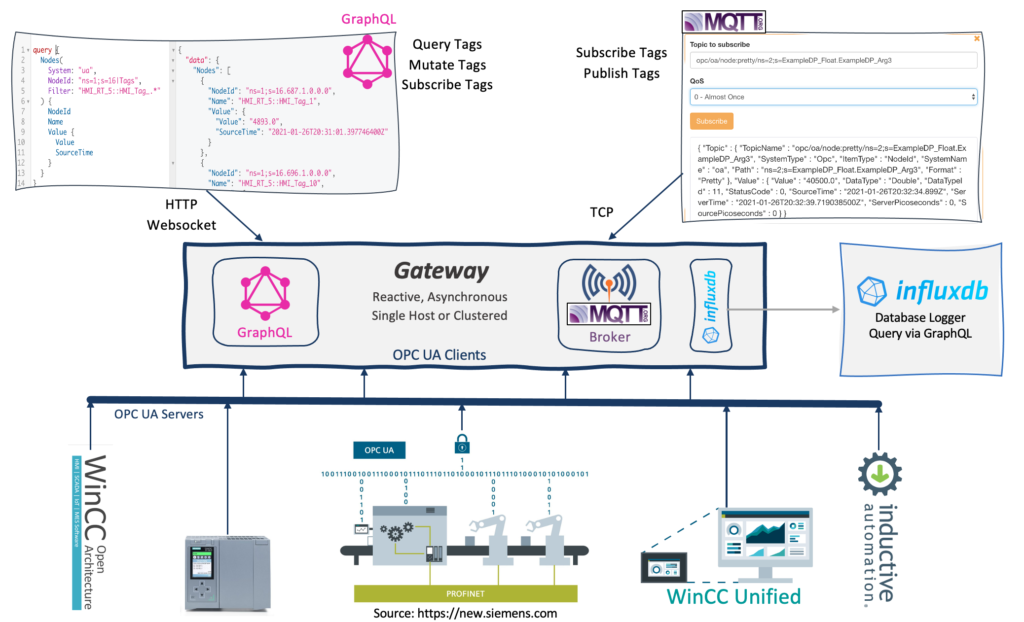

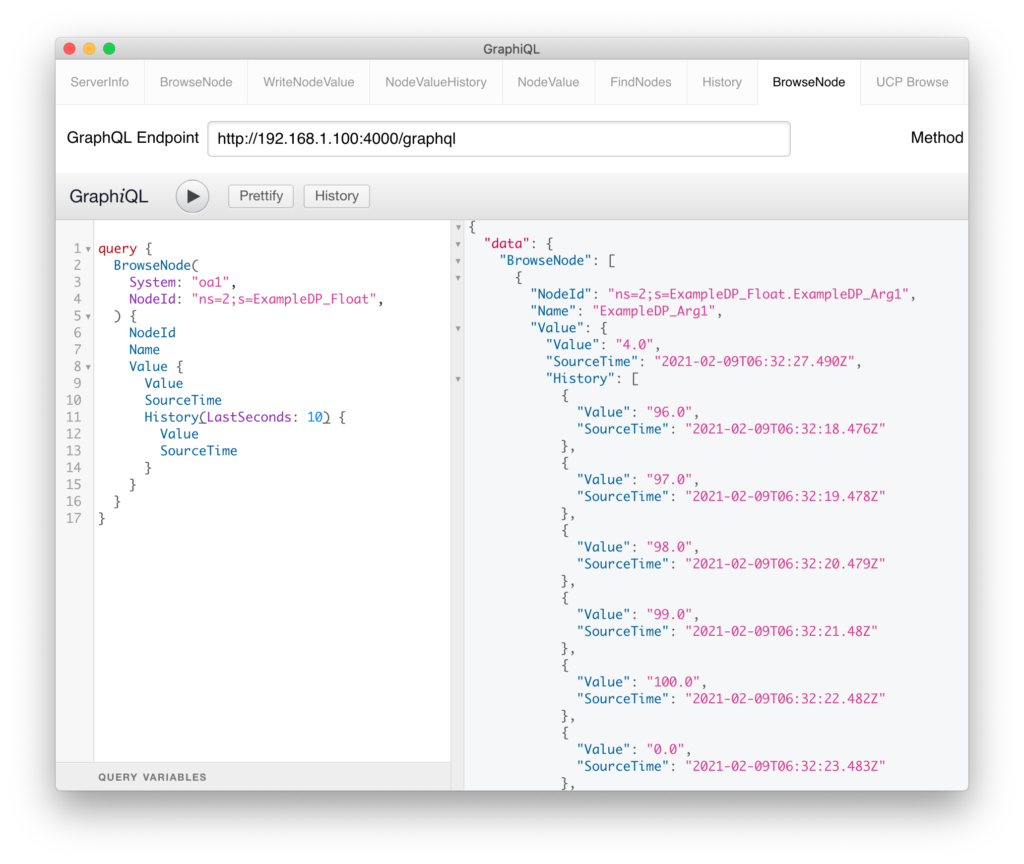

This is an OPC UA gateway that exposes your OPC UA values via MQTT and GraphQL (HTTP). If your PLC has an OPC UA server, or your SCADA system provides one, you can query its data through either interface. The gateway can also log value changes of OPC UA nodes to InfluxDB, and the archived values can then be queried via GraphQL as well.

Runs anywhere: Linux, Windows, Mac, …

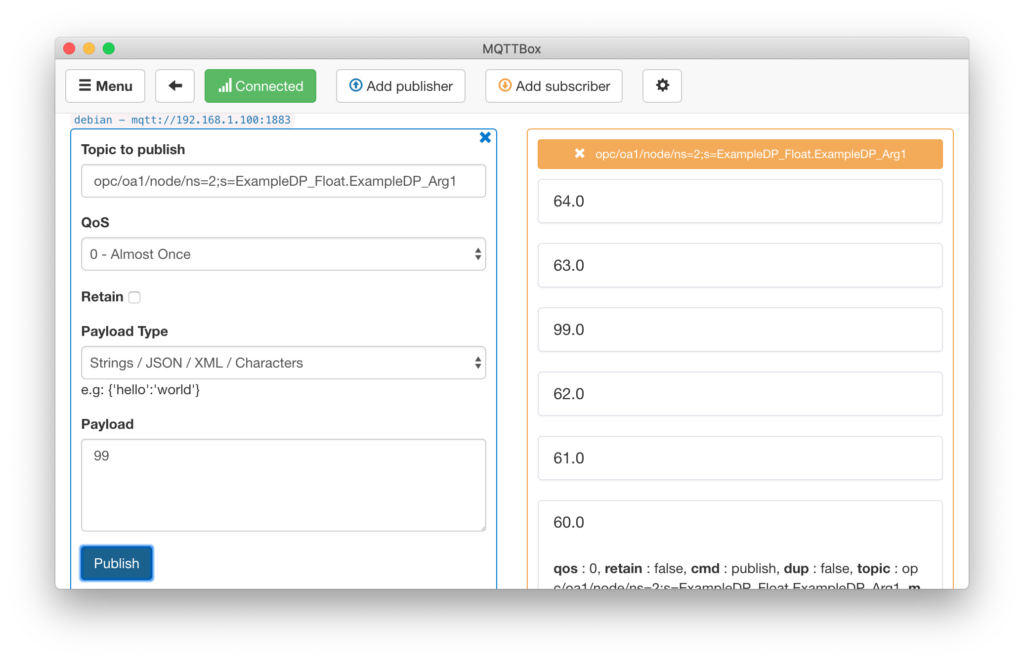

Example MQTT Client:
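As a rough sketch of what such a client could look like in Python: the helpers below assemble topic strings in the shape of the example topics in this section, and `subscribe` prints whatever arrives on one of them. The segment names (`system`, `namespace`, `identifier`) are my reading of the example topics, not an official specification. The function names, the use of the third-party `paho-mqtt` package (2.x API) and the `localhost:1883` endpoint are assumptions for illustration.

```python
def node_topic(system, namespace, identifier, fmt=None):
    """Build a node topic: opc/<system>/node[:<fmt>]/<namespace>/<identifier>.

    fmt is the optional suffix seen in the example topics
    (e.g. "Value", "Json", "Pretty"); None yields the plain "node" form.
    """
    kind = "node" if fmt is None else f"node:{fmt}"
    return f"opc/{system}/{kind}/{namespace}/{identifier}"


def path_topic(system, *segments):
    """Build a path topic: opc/<system>/path/<segment>/<segment>/..."""
    return "/".join(["opc", system, "path", *segments])


def subscribe(topic, host="localhost", port=1883):
    """Subscribe to one topic and print every message (blocks forever).

    host and port are placeholders; point them at wherever the
    gateway's MQTT endpoint is reachable in your setup.
    """
    # Imported lazily so the topic helpers work without paho-mqtt installed.
    import paho.mqtt.client as mqtt  # assumption: paho-mqtt 2.x

    def on_connect(client, userdata, flags, reason_code, properties):
        # Subscribe once the connection is up (and again after reconnects).
        client.subscribe(topic)

    def on_message(client, userdata, msg):
        print(msg.topic, msg.payload.decode("utf-8", errors="replace"))

    client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
    client.on_connect = on_connect
    client.on_message = on_message
    client.connect(host, port)
    client.loop_forever()


# Example call (needs a reachable MQTT endpoint and paho-mqtt):
# subscribe(node_topic("unified", 1, "16.687.1.0.0.0", fmt="Value"))
```

Keeping topic construction separate from the network code makes it easy to check the strings against the examples before connecting to anything.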

Example MQTT Topics:

opc/unified/node/1/16.687.1.0.0.0

opc/unified/node:Value/1/16.687.1.0.0.0

opc/unified/node:Pretty/1/16.687.1.0.0.0

opc/unified/path/Tags/HMI_Tag_3

opc/oa/node:Value/2/ExampleDP_Float.ExampleDP_Arg1

opc/oa/node:value/2/ExampleDP_Float.ExampleDP_Arg1

opc/oa/node:Json/2/ExampleDP_Float.ExampleDP_Arg1

opc/oa/node:json/2/ExampleDP_Float.ExampleDP_Arg1

Example GraphQL Queries:

Here are some videos and demos: